OSB supports the JCA protocol. In this post I will discuss an issue we encountered when implementing an FTP poll with JCA in OSB in a clustered SOA/OSB environment.

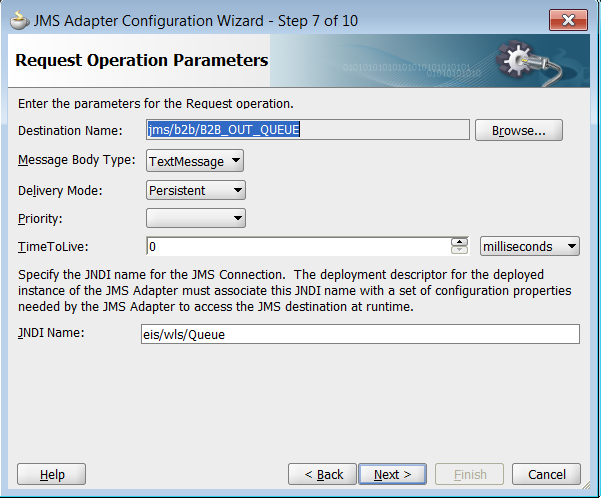

Setup

- Created a JCA FTP adapter connection factory with HA parameters

- Created an FTP adapter in JDeveloper

- Imported the required artifacts from the JDeveloper project into OSB

- Created an OSB proxy service using the JCA transport

Error in OSB log

<Error> <JCA_FRAMEWORK_AND_ADAPTER> <osb01> <[ACTIVE] ExecuteThread: '145' for queue: 'weblogic.kernel.Default (self-tuning)'> <097b277aaf0a827d:40ef1fa1:142b5024552:-8000-0000000000079fde> <1386802596992> <BEA-000000>

Unable to create clustered resource for inbound.

Unable to create clustered resource for inbound.

Please make sure the database connection parameters are correct.

[Caused by: Unable to resolve 'jdbc.SOADataSource'. Resolved 'jdbc']>

<Error> <JCATransport> <osb01> <[ACTIVE] ExecuteThread: '145' for queue: 'weblogic.kernel.Default (self-tuning)'> <pravva01> <> <097b277aaf0a827d:40ef1fa1:142b5024552:-8000-0000000000079fde> <1386802596993> <BEA-381959> <Failed to activate JCABindingService for wsdl: servicebus:/WSDL, operation: Get, exception: BINDING.JCA-12600

Generic error.

Generic error.

Cause: {0}.

Please create a Service Request with Oracle Support.

BINDING.JCA-12600

Generic error.

Generic error.

Cause: {0}.

Please create a Service Request with Oracle Support.

at oracle.tip.adapter.sa.impl.inbound.JCABindingActivationAgent.activateEndpoint(JCABindingActivationAgent.java:340)

at oracle.tip.adapter.sa.impl.JCABindingServiceImpl.activate(JCABindingServiceImpl.java:113)

at com.bea.wli.sb.transports.jca.binding.JCATransportInboundOperationBindingServiceImpl.activateService(JCATransportInboundOperationBindingServiceImpl
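The line that matters in this log is the `Caused by` entry: the JNDI lookup found the `jdbc` context but nothing named `SOADataSource` under it on that server. As a rough illustration, the sketch below pulls that information out of a log with a regular expression. The function name `find_unresolved_jndi` is made up for this example, and the pattern assumes the message has exactly the wording shown above.

```python
import re

# Assumes the "Unable to resolve '...'. Resolved '...'" wording seen in this log.
_UNRESOLVED = re.compile(r"Unable to resolve '([^']+)'\. Resolved '([^']*)'")


def find_unresolved_jndi(log_text):
    """Return (requested, resolved_prefix, missing) tuples, one per match."""
    results = []
    for requested, resolved in _UNRESOLVED.findall(log_text):
        missing = requested
        # Strip the part of the dotted name that the lookup did resolve.
        if resolved and requested.startswith(resolved + "."):
            missing = requested[len(resolved) + 1:]
        results.append((requested, resolved, missing))
    return results


line = "[Caused by: Unable to resolve 'jdbc.SOADataSource'. Resolved 'jdbc']>"
print(find_unresolved_jndi(line))
# prints [('jdbc.SOADataSource', 'jdbc', 'SOADataSource')]
```

The third element of each tuple is the binding that is missing on the server that logged the error, which is usually the quickest hint about which resource is not targeted there.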

Solution

As highlighted in the screenshot above, 'jdbc/SOADataSource' is one of the parameters configured on the JCA adapter connection factory, and it is mandatory. This data source is available with the installation and points to the SOA dehydration store schema, SOAINFRA, where the high-availability features are installed.

By default this data source is targeted only to the SOA cluster, but in our case it is OSB that uses the JCA framework. When OSB accesses the FTP JNDI connection factory configured with 'jdbc/SOADataSource', the data source cannot be looked up because it is not targeted to the OSB cluster. That is why the log reports that only the 'jdbc' part of the name was resolved.

So we need to target the data source to the OSB cluster in addition to the SOA cluster and bounce the OSB managed servers. This resolves the issue.
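The targeting can be done in the WebLogic Administration Console, or scripted. Below is a minimal WLST (Jython) sketch of the scripted route, not the exact steps we ran. The admin URL, credentials, the cluster name `osb_cluster` and the resource name `SOADataSource` are placeholders and assumptions, so check them against your own domain. The helper `ensure_targeted` is a name made up for this example.

```python
# Sketch for WLST (Jython). URL, credentials and MBean names are placeholders.

def ensure_targeted(ds_mbean, target_mbean):
    """Add target_mbean to the data source's targets unless already present.

    Returns True if a target was added, False if nothing changed.
    """
    for existing in ds_mbean.getTargets():
        if existing.getName() == target_mbean.getName():
            return False
    ds_mbean.addTarget(target_mbean)
    return True


# WLST is assumed to run scripts with __name__ set to 'main', so this part is
# skipped when the file is run by a plain Python interpreter.
if __name__ == 'main':
    connect('weblogic', 'password', 't3://adminhost:7001')  # placeholders
    edit()
    startEdit()
    ds = getMBean('/JDBCSystemResources/SOADataSource')  # assumed resource name
    osb = getMBean('/Clusters/osb_cluster')  # hypothetical cluster name
    if ensure_targeted(ds, osb):
        save()
        activate()
    else:
        cancelEdit('y')  # nothing to change, discard the edit session
    disconnect()
```

The check before `addTarget` keeps the script safe to re-run, and the existing SOA cluster target is left untouched. The OSB managed servers still need to be restarted afterwards, as described above.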